US AI Regulation, Federal Preemption, Data Privacy, Tech Policy

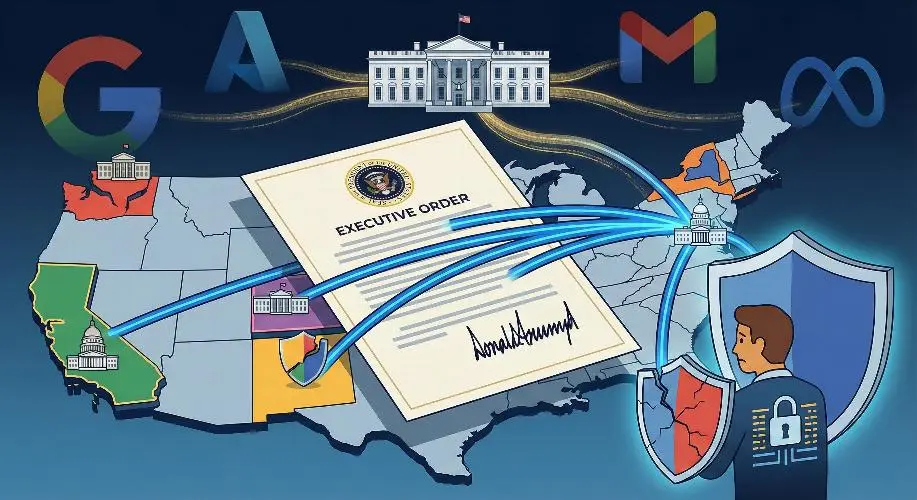

On December 11, 2025, President Donald Trump signed a sweeping Executive Order (EO) designed to consolidate AI regulation at the federal level, effectively aiming to block individual states from enforcing their own AI and data safety laws. This move marks a significant shift in the US regulatory landscape, prioritizing “innovation” and “national competitiveness” over the patchwork of state-by-state protections that currently exist.

The Core Development: Stopping the “Patchwork”

The Executive Order establishes a “minimally burdensome national standard” for Artificial Intelligence. Its primary mechanism is federal preemption, a legal doctrine allowing federal law to override conflicting state laws.

- The Target: The EO specifically targets strict state-level regulations, such as California’s AI safety bills and Colorado’s algorithmic discrimination laws.

- The Mechanism: It creates a Department of Justice “AI Litigation Task Force” to sue states that enact regulations deemed “onerous” or contradictory to the federal pro-innovation stance. It also threatens to withhold federal funding (such as broadband grants) from non-compliant states.

- The Rationale: The administration argues that complying with 50 different state laws stifles American innovation and handicaps the US in the AI arms race against China.

Are Tech Giants Influencing the Federal Government?

The short answer is yes. Current analysis suggests a strong alignment between the strategic goals of major technology companies (“Big Tech”) and the new federal policy.

- Lobbying for Preemption: For years, tech giants like Google, Microsoft, and OpenAI, along with venture capital firms like Andreessen Horowitz, have lobbied for a federal framework. Their argument is that a single national standard—even if it has some rules—is far cheaper and easier to navigate than 50 different, stricter state laws (a “regulatory patchwork”).

- Direct Appointments: The influence is visible in personnel. Figures from the venture capital world, such as David Sacks (appointed as the “AI and Crypto Czar”), have been instrumental in shaping this policy. Their presence signals a direct pipeline from Silicon Valley ideology to White House policy.

- The Trade-off: The industry gets what it wants: the removal of strict state liability laws (like California’s proposed safety testing mandates) in exchange for a lighter, “innovation-friendly” federal oversight.

Impact on the “Data Subject” (The Consumer)

For the individual “data subject”—the regular person whose data is used to train AI—this shift has profound implications.

- Loss of Local Protections: States like California and Illinois have historically been aggressive in protecting data privacy (e.g., CCPA/CPRA, BIPA). If federal rules preempt these, consumers may lose the right to opt out of automated decision-making or to sue for damages if an AI harms them, unless the federal standard explicitly preserves those rights (which currently seems unlikely).

- Weaker Recourse for Bias: Many state laws focus on “algorithmic discrimination” (e.g., an AI denying you a loan based on biased data). The new EO characterizes some of these anti-bias requirements as “compelled speech” or “ideological,” potentially removing the safety nets that protect minorities and vulnerable groups from automated bias.

- Uniformity vs. Safety: While a national standard offers clarity (you know the rules are the same everywhere), it is typically set at a minimum baseline, and under preemption states cannot build stronger protections on top of it. The level of protection for your data will likely be lower than what the strictest states previously offered.

Q&A: Understanding the New AI Landscape

Q: Does this Executive Order immediately cancel all state privacy laws?

A: No, not immediately. It sets up a legal battle. The EO directs the DOJ to challenge specific AI laws that conflict with federal policy. However, because AI and data privacy are deeply intertwined, state data privacy laws could be targeted if they are seen as hindering AI development.

Q: Why do Tech Giants prefer one federal law over state laws?

A: Compliance costs. It is incredibly expensive to build different AI models for California, Texas, and New York. A single federal law, especially a “light-touch” one, reduces their legal risk and operational costs, allowing them to release products faster.

Q: What happens if I live in a state like California with strong AI laws?

A: Your state’s laws will be at the center of a “constitutional clash.” California will likely defend its laws in court. Until the Supreme Court rules on whether the federal government has the right to preempt these specific state powers, the status of your local protections remains uncertain.

Q: Does this mean my data is less safe?

A: Likely, yes. The stated goal of the EO is to reduce “burdensome” regulations. In the context of data, “burdensome” often refers to the requirements companies must follow to keep your data private or to explain how their AI made a decision about you.

Q: Can states fight back?

A: Yes. States can sue the federal government, arguing that consumer protection is a traditional “police power” of the states. This will likely result in a lengthy legal battle that could reach the Supreme Court.

While the Department of Justice’s “AI Litigation Task Force” appears to focus on non-compliant states, an open question remains: which department will regulate the tech giants?
