At a Glance: AI & Data Privacy Risks

- Article 22: Individuals have the right not to be subject to decisions based solely on automated processing that produce legal or similarly significant effects on them.

- Transparency: You must be able to explain how your AI reaches decisions about people, including meaningful information about the logic involved (no “black boxes”).

- Training Data: Scraping public data for AI training may violate the requirement to have a lawful basis under Article 6.

- The EU AI Act: A new regulation that works alongside GDPR rather than replacing it.

Does GDPR apply to Artificial Intelligence?

Yes.

The General Data Protection Regulation (GDPR) applies to any processing of personal data, regardless of the technology used to process it. The regulation is “technologically neutral,” meaning it does not matter if the data is processed by a human filing paper records, a basic spreadsheet, or a complex machine learning algorithm—if personal data is involved, GDPR compliance is mandatory.

Why AI is not exempt

Many organizations mistakenly believe that because AI models are complex “black boxes,” they operate outside standard regulations. However, Article 4(2) of the GDPR defines “processing” broadly as any operation performed on personal data, such as collection, recording, organization, structuring, storage, and use.

Since AI systems require vast amounts of data to learn (training phase) and to function (inference phase), they almost always involve processing personal information. Therefore, the Article 5 principles of transparency, fairness, and accountability apply in full force.

Key Concept: Predictive vs. Generative AI Risks

While GDPR applies to all AI, the specific compliance risks often differ depending on the type of AI you are deploying.

Predictive AI (Classifying People)

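- What it does: Uses historical data to forecast future outcomes, such as credit scoring, fraud detection, or CV screening.

- The GDPR Risk: The primary concern here is Automated Decision-Making (Article 22). If your AI makes decisions about individuals based solely on automated processing, and those decisions have legal or similarly significant effects (e.g., denying a loan), Article 22 restricts them. Where such decisions are permitted, people must be able to obtain human intervention, express their point of view, and contest the decision, and you must give them meaningful information about the logic involved. You cannot hide behind “the algorithm said so.”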
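To make “explain the decision logic” concrete, here is a minimal sketch of reason codes for a simple linear scoring model. The feature names, weights, intercept, and cut-off are hypothetical and chosen only for illustration; real credit models differ, and non-linear models need other explanation techniques.

```python
from dataclasses import dataclass

# Hypothetical weights for an illustrative linear score (not a real credit model).
WEIGHTS = {
    "years_at_address": 0.4,
    "missed_payments_12m": -1.5,
    "debt_to_income": -2.0,
    "income_band": 0.8,
}
INTERCEPT = 1.0
APPROVE_AT = 0.0  # hypothetical cut-off: score >= APPROVE_AT means "approve"


@dataclass
class Decision:
    approved: bool
    score: float
    reason_codes: list  # features that lowered the score most, worst first


def decide(applicant: dict, top_n: int = 2) -> Decision:
    # In a linear model each feature's contribution is weight * value,
    # and the score is the intercept plus the sum of contributions.
    contributions = {name: w * applicant[name] for name, w in WEIGHTS.items()}
    score = INTERCEPT + sum(contributions.values())
    # Reason codes: the negative contributions, most negative first.
    negatives = sorted(
        (item for item in contributions.items() if item[1] < 0),
        key=lambda item: item[1],
    )
    reasons = [name for name, _ in negatives[:top_n]]
    return Decision(approved=score >= APPROVE_AT, score=score, reason_codes=reasons)


if __name__ == "__main__":
    d = decide({"years_at_address": 2, "missed_payments_12m": 3,
                "debt_to_income": 0.5, "income_band": 1})
    print(d.approved, round(d.score, 2), d.reason_codes)
    # False -2.9 ['missed_payments_12m', 'debt_to_income']
```

This works cleanly only because the model is additive. Whatever the model, the reason codes are most useful when they reach both the human reviewer and the individual affected.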

Generative AI (Creating Content)

- What it does: Creates new text, images, or code based on patterns learned from massive datasets (e.g., ChatGPT, Midjourney).

- The GDPR Risk: The main risks here are Lawfulness of Training Data and Accuracy (including the Right to Rectification).

  - Training Data: Did you have a lawful basis (like consent or legitimate interest) to scrape the web data used to train the model?

  - Accuracy: If a generative AI “hallucinates” and writes a false biography about a real person, it violates the GDPR principle of accuracy, and correcting that data inside a trained model is technically difficult.
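One practical way to keep the training-data question answerable is to tag every record with its source and documented lawful basis, then exclude anything that contains personal data but lacks an approved basis. The sketch below assumes such tags already exist; the field names and the approved set of bases are hypothetical policy choices, and it does not handle Article 9 special category data, which needs an additional condition.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Hypothetical policy: Article 6 bases your legal team has approved for training.
# Legitimate interest is only valid with a documented balancing test behind it.
APPROVED_BASES = {"consent", "legitimate_interest"}


@dataclass
class TrainingRecord:
    text: str
    source: str                  # e.g. a URL or internal system name
    contains_personal_data: bool
    lawful_basis: Optional[str]  # documented Article 6 basis, or None if unknown


def filter_training_set(
    records: List[TrainingRecord],
) -> Tuple[List[TrainingRecord], List[TrainingRecord]]:
    """Split records into (kept, excluded) according to the policy above.
    Note: Article 9 special category data is NOT handled here."""
    kept, excluded = [], []
    for record in records:
        if not record.contains_personal_data or record.lawful_basis in APPROVED_BASES:
            kept.append(record)
        else:
            excluded.append(record)
    return kept, excluded


if __name__ == "__main__":
    records = [
        TrainingRecord("product manual", "internal-docs", False, None),
        TrainingRecord("forum post by a named user", "scraped-forum", True, None),
        TrainingRecord("support ticket", "crm", True, "legitimate_interest"),
    ]
    kept, excluded = filter_training_set(records)
    print([r.source for r in excluded])  # ['scraped-forum']
```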

How to conduct a Data Protection Impact Assessment (DPIA) for AI

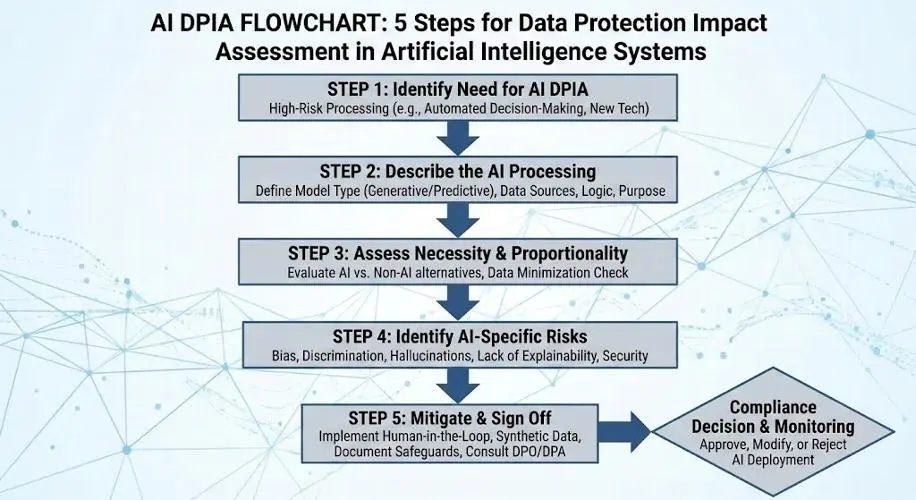

Direct Answer: A DPIA for AI must be conducted before any data processing begins. It requires you to map the data flow, assess the necessity of using AI, identify risks (like bias or lack of explainability), and document the specific safeguards (like human oversight) you will implement.

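Under GDPR Article 35, a DPIA is mandatory when processing, “in particular using new technologies,” is likely to result in a high risk to individuals. Supervisory authorities treat innovative technology such as AI as a key high-risk indicator, so in practice most AI projects that process personal data will need one. Article 35(3)(a) also makes a DPIA explicitly mandatory for systematic and extensive automated evaluation of people, including profiling, that produces legal or similarly significant effects.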

Step 1: Describe the Processing (The “Why” and “How”)

You cannot assess risk if you don’t understand the tool. Your DPIA must document:

- The Model Type: Is it a Generative AI (e.g., creating marketing copy) or a Predictive AI (e.g., scoring credit applications)?

- Data Source: Are you using public data scraped from the web, or internal customer data? Tip: “Publicly available” does not mean “free to use” under GDPR.

- The Logic: How does the system make decisions? If you are using a “black box” algorithm, you must document how you will ensure transparency.
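Teams often keep Step 1 as a structured record so that gaps are easy to spot. Here is a minimal sketch; the class, field names, and checks are hypothetical and would need to match your own DPIA template.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class ModelType(Enum):
    PREDICTIVE = "predictive"   # e.g. scoring credit applications
    GENERATIVE = "generative"   # e.g. creating marketing copy


@dataclass
class ProcessingDescription:
    purpose: str
    model_type: ModelType
    data_sources: List[str]
    uses_public_web_data: bool
    logic_summary: str                       # plain-language account of how outputs are produced
    web_data_lawful_basis: Optional[str] = None
    transparency_measures: List[str] = field(default_factory=list)

    def gaps(self) -> List[str]:
        """Return Step 1 items that still need documenting."""
        issues = []
        if not self.purpose.strip():
            issues.append("purpose")
        if not self.data_sources:
            issues.append("data sources")
        if self.uses_public_web_data and not self.web_data_lawful_basis:
            # "Publicly available" does not mean "free to use".
            issues.append("lawful basis for public web data")
        if not self.logic_summary.strip():
            issues.append("decision logic")
        if not self.transparency_measures:
            issues.append("transparency measures")
        return issues


if __name__ == "__main__":
    draft = ProcessingDescription(
        purpose="Score incoming credit applications",
        model_type=ModelType.PREDICTIVE,
        data_sources=["internal application forms", "public web profiles"],
        uses_public_web_data=True,
        logic_summary="Scoring model trained on past application outcomes",
    )
    print(draft.gaps())
    # ['lawful basis for public web data', 'transparency measures']
```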

Step 2: Assess Necessity and Proportionality

Ask the hard question: Do you actually need AI to solve this problem?

- If a simple spreadsheet could achieve the same result with less data, using a complex AI model may violate the principle of Data Minimization.

- Tip: Explicitly state: “We considered non-AI alternatives but chose AI because [Reason].” This documents that you weighed the risks against less intrusive options.
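Both points in this step can be kept in a reviewable, versioned form. The sketch below is hypothetical: `necessity_statement` builds the “we considered non-AI alternatives” sentence only from alternatives that were actually documented, and `unjustified_fields` lists collected fields with no documented reason, which are candidates to drop under data minimization.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass
class Alternative:
    name: str
    rejected_because: str


def necessity_statement(alternatives: List[Alternative], reason_for_ai: str) -> str:
    """Build the Step 2 statement; refuse to do so without real content."""
    if not alternatives:
        raise ValueError("document at least one non-AI alternative")
    if not reason_for_ai.strip():
        raise ValueError("state why AI was chosen")
    considered = "; ".join(
        f"{a.name} (rejected: {a.rejected_because})" for a in alternatives
    )
    return f"We considered non-AI alternatives [{considered}] but chose AI because {reason_for_ai}."


def unjustified_fields(collected: Set[str], justifications: Dict[str, str]) -> List[str]:
    """Fields being collected that have no documented reason for this purpose."""
    return sorted(f for f in collected if not justifications.get(f, "").strip())


if __name__ == "__main__":
    print(necessity_statement(
        [Alternative("rule-based spreadsheet", "cannot interpret free-text notes")],
        "it can classify free-text notes",
    ))
    print(unjustified_fields(
        {"name", "email", "date_of_birth", "postcode"},
        {"email": "send the decision", "postcode": "regional fraud check", "name": ""},
    ))
    # ['date_of_birth', 'name']
```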

Step 3: Identify AI-Specific Risks

Standard DPIAs focus heavily on security risks such as data leaks. AI DPIAs must also look for algorithmic harms.

- Bias & Discrimination: Will the model treat people differently based on age, gender, or location?

- “Hallucinations”: What is the risk of the AI generating false information about an individual?

- Automation Bias: The risk that your staff will blindly accept the AI’s recommendation without checking it.
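Two of these risks can be monitored with simple metrics. The sketch below compares selection rates across groups and measures how often human reviewers override the AI. The 0.8 threshold is the US “four-fifths” rule of thumb from employment-selection guidance, borrowed here as a screening heuristic; it is not a GDPR test. Likewise, a very low override rate is only a possible sign of automation bias, not proof of it.

```python
from collections import defaultdict
from typing import Dict, Iterable, Sequence, Tuple


def selection_rates(outcomes: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """outcomes: (group, was_selected) pairs. Returns the selection rate per group."""
    totals: Dict[str, int] = defaultdict(int)
    selected: Dict[str, int] = defaultdict(int)
    for group, was_selected in outcomes:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratio(rates: Dict[str, float]) -> float:
    """Lowest group rate divided by highest (1.0 means equal rates)."""
    highest = max(rates.values())
    return 1.0 if highest == 0 else min(rates.values()) / highest


def override_rate(ai_recommendations: Sequence[str], final_decisions: Sequence[str]) -> float:
    """Share of cases where the human changed the AI's recommendation."""
    if not ai_recommendations or len(ai_recommendations) != len(final_decisions):
        raise ValueError("need two non-empty sequences of equal length")
    changed = sum(a != f for a, f in zip(ai_recommendations, final_decisions))
    return changed / len(ai_recommendations)


FOUR_FIFTHS = 0.8  # screening heuristic only; flags results for closer review

if __name__ == "__main__":
    outcomes = [("A", i < 8) for i in range(10)] + [("B", i < 5) for i in range(10)]
    ratio = impact_ratio(selection_rates(outcomes))
    print(round(ratio, 3), ratio < FOUR_FIFTHS)  # 0.625 True
    print(override_rate(["approve", "reject", "approve", "approve"],
                        ["approve", "approve", "approve", "approve"]))  # 0.25
```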

Step 4: Mitigate and Sign Off

You don’t need to eliminate risk, but you must reduce it to an acceptable level.

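- Human-in-the-Loop (HITL): Ensure a human meaningfully reviews high-impact decisions. Genuine human involvement keeps a decision outside Article 22's “solely automated” scope, and where solely automated decisions are permitted, Article 22(3) still requires a route to human intervention.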

- Synthetic Data: Can you train the model on fake data instead of real customer data?

- Consultation: If you have designated a Data Protection Officer (DPO), you must seek their advice when carrying out the DPIA (Article 35(2)). If the residual risk remains high, you must consult your Data Protection Authority (like the ICO or CNIL) before processing starts (Article 36).
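The human-review and consultation steps can be encoded as explicit rules so they cannot be silently skipped. Here is a minimal sketch; the confidence floor, the risk labels, and the function names are hypothetical, and routing a case to a human only helps if that review is meaningful rather than a rubber stamp.

```python
from enum import Enum
from typing import List


class Route(Enum):
    AUTO_APPLY = "auto_apply"
    HUMAN_REVIEW = "human_review"


def route_decision(significant_effect: bool, model_confidence: float,
                   confidence_floor: float = 0.9) -> Route:
    """Send high-impact or low-confidence outputs to a human reviewer."""
    if significant_effect:              # legal or similarly significant effect on a person
        return Route.HUMAN_REVIEW
    if model_confidence < confidence_floor:
        return Route.HUMAN_REVIEW
    return Route.AUTO_APPLY


def sign_off_actions(residual_risk: str, dpo_designated: bool) -> List[str]:
    """Actions before processing starts, given the DPIA's residual-risk rating."""
    if residual_risk not in {"low", "medium", "high"}:
        raise ValueError("residual_risk must be 'low', 'medium' or 'high'")
    actions = []
    if dpo_designated:
        actions.append("seek DPO advice (Art. 35(2))")
    if residual_risk == "high":
        actions.append("consult the supervisory authority before processing (Art. 36)")
    else:
        actions.append("record sign-off and proceed")
    return actions


if __name__ == "__main__":
    print(route_decision(significant_effect=True, model_confidence=0.99))  # Route.HUMAN_REVIEW
    print(sign_off_actions("high", dpo_designated=True))
```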