AI Governance Policy & Compliance Framework for UK Financial Services

The Formiti Framework

From Black Box to Boardroom: Solving the AI Explainability Crisis

In 2026, “algorithmic opacity” is a liability your firm cannot afford. We transform complex neural networks into transparent, auditable governance trails that satisfy both the regulator and the Board.

The Algorithmic Audit (SM&CR Alignment):

We don’t just test code; we map AI decision-paths to the Senior Managers and Certification Regime. We identify exactly which individual holds the “prescribed responsibility” for each autonomous model, ensuring your firm meets the 2026 “Duty of Care” standards.

The Bias Mitigation Engine:

Using our proprietary Fairness-as-a-Service audits, we stress-test your AI against the Equality Act 2010 and the FCA Consumer Duty. We provide the evidence needed to prove that your automated lending decisions or insurance premiums do not inadvertently discriminate against customers on the basis of protected characteristics.

Third-Party AI Vetting (The CTP Shield)

With the UK Treasury now designating Critical Third Parties (CTPs) for enhanced scrutiny in 2026, we vet your reliance on external LLMs (like OpenAI or Anthropic). We ensure your data processing agreements include the mandatory “Right to Audit” clauses for AI supply chains.

How do UK banks comply with AI transparency in 2026?

UK financial firms comply with 2026 AI transparency mandates by aligning algorithmic outputs with FCA Consumer Duty and SM&CR accountability. This requires a formal AI Governance Framework that includes model explainability reports, human-in-the-loop protocols for high-stakes decisions, and continuous bias monitoring to prevent regulatory breaches and consumer harm.

Your 120-Day Roadmap to AI Accountability

A forensic, four-stage methodology to build your firm’s AI future.

01: The AI-BOM Discovery

The Action: We build your AI Bill of Materials (AI-BOM). This is a comprehensive, machine-readable inventory of every AI model, third-party API (like ChatGPT or Copilot), and automated decision tool currently used in your firm.

The Result: Total visibility. We identify “Shadow AI” and classify every tool into Risk Tiers (Prohibited, High-Risk, or Limited) based on ISO/IEC 42001 standards.
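As a rough illustration of what a machine-readable AI-BOM could look like, the sketch below models one inventory record per tool and serializes the inventory to JSON. The field names (`name`, `vendor`, `use_case`, `approved`) and the helper names are hypothetical, not the actual Formiti schema; the three risk tiers simply mirror the ones named above.

```python
import json
from dataclasses import dataclass, asdict
from enum import Enum


class RiskTier(Enum):
    PROHIBITED = "prohibited"
    HIGH_RISK = "high_risk"
    LIMITED = "limited"


@dataclass
class AIBomEntry:
    name: str            # model or tool name
    vendor: str          # "internal" or the third-party supplier
    use_case: str        # what the tool is used for
    risk_tier: RiskTier  # one of the three tiers named in the text
    approved: bool       # assumption: False marks a potential "Shadow AI" tool


def shadow_ai(entries):
    """Return the names of tools in use that were never formally approved."""
    return [e.name for e in entries if not e.approved]


def to_json(entries):
    """Serialize the inventory so other systems can consume it."""
    records = []
    for e in entries:
        record = asdict(e)
        record["risk_tier"] = e.risk_tier.value  # store the tier as plain text
        records.append(record)
    return json.dumps(records, indent=2)
```

Keeping the tier as an enumerated value rather than free text means a typo cannot silently create a fourth tier.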

02: The Gap & Liability Audit

The Action: Our Tri-Team conducts a deep-dive audit. The Legal Team reviews your UK GDPR Article 27 (representative) status; the Privacy Team conducts Data Protection Impact Assessments (DPIAs); and the Operations Team maps your SM&CR accountability.

The Result: A “Zero-Gap” report. You receive a prioritized list of legal and technical vulnerabilities that need to be closed to meet FCA Consumer Duty expectations.

03: Framework Integration

The Action: We deploy your customized Financial AI Policy. We establish your “Human-in-the-Loop” protocols, install “Kill-Switch” overrides for autonomous agents, and integrate our Accountability Logs into your existing risk workflows.

The Result: A functional governance ecosystem. Your Senior Managers now have the auditable data they need to sign off on AI projects with personal liability protection.

04: Continuous Oversight

The Action: Formiti becomes your Officially Named DPO and AI Monitor. We conduct quarterly bias-testing, monitor for “Model Drift,” and provide 24/7 incident response for any AI-driven data breaches or regulatory inquiries.

The Result: Permanent resilience. You stay ahead of updates under the UK Data (Use and Access) Act 2025, ensuring your innovation never outpaces your compliance.
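The "Model Drift" monitoring mentioned in this stage can be made concrete in several ways. One widely used option, shown here purely as an assumption about how such a check might work, is the Population Stability Index (PSI), which compares the distribution of a model input or score at deployment time with the distribution seen today. The function name, the bin count, and the `eps` floor are illustrative choices.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a baseline sample and a recent sample of the same variable."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid a zero bin width on constant data

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values
            counts[idx] += 1
        # Floor each share at eps so the logarithm below is always defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A PSI of zero means the two samples fall into the bins identically; a commonly cited rule of thumb treats values above roughly 0.25 as a significant shift, though the right alert level is a policy decision for each firm.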

Institutionalizing AI Trust

Aligning Algorithmic Decision-Making with Consumer Duty and SM&CR Standards

Algorithmic Accountability (SM&CR Alignment)

We bridge the gap between code and conduct. By mapping AI decision-paths directly to the Senior Managers and Certification Regime, we identify the specific individual holding “prescribed responsibility” for autonomous models. This ensures your governance structure meets the 2026 “Duty of Care” standards with precision.

Ethical Resilience

The Fairness & Bias Engine

Using our Fairness-as-a-Service audits, we stress-test models against the Equality Act 2010 and the FCA Consumer Duty. We provide the documented evidence necessary to prove that automated lending, pricing, or insurance workflows remain equitable and compliant.

Supply Chain Security

Third-Party AI Vetting (The CTP Shield)

As the UK Treasury intensifies scrutiny on Critical Third Parties (CTPs), we audit your reliance on external LLMs. We ensure your AI supply chain remains resilient and that all data processing agreements include mandatory “Right to Audit” clauses.

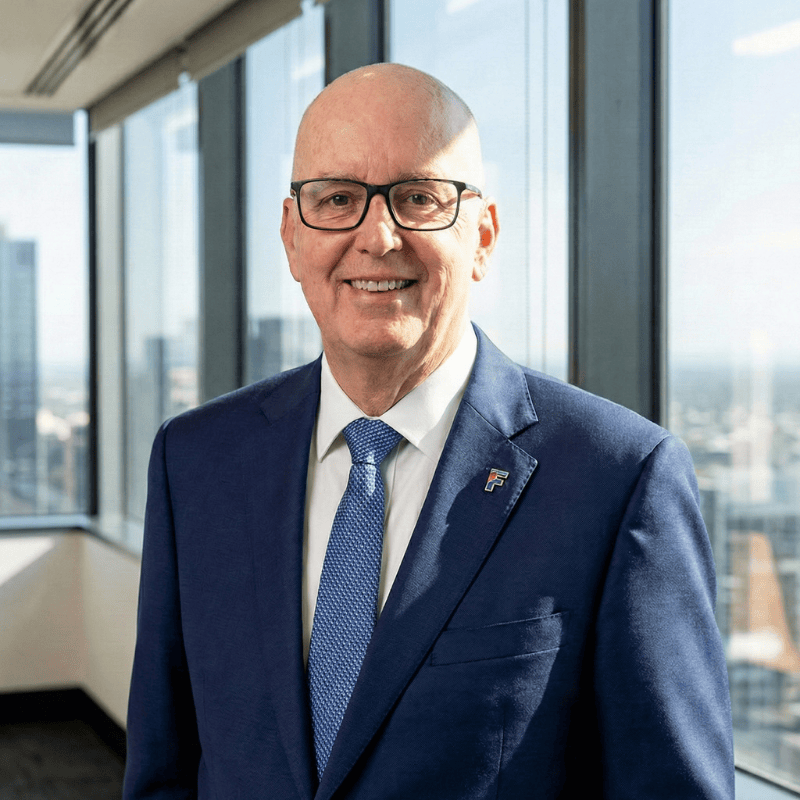

The CEO’s Message

“In the 2026 financial landscape, AI is no longer a ‘future-state’ technology; it is our operational reality. However, innovation without accountability is a liability. At Formiti, we believe that the ‘Black Box’ era is over. Our commitment to AI Governance isn’t just about meeting FCA mandates or ticking boxes for SM&CR; it’s about building a foundation of radical transparency. By aligning every algorithm with the Consumer Duty, we ensure that as our technology evolves, our integrity remains absolute.”

— Robert Healey, CEO

Strategic FAQ: Navigating the 2026 AI Mandate

Critical Insights for Boards and Senior Managers

Q1. Who holds the "Prescribed Responsibility" for AI outcomes under SM&CR?

Under the 2026 updated Senior Managers and Certification Regime, the FCA does not require a standalone “AI Officer.” Instead, accountability for AI-driven outcomes is mapped to existing Senior Management Functions (SMFs). Typically, this falls to the Chief Risk Officer (SMF4) or Chief Operations Officer (SMF24). Our framework ensures these individuals can demonstrate “reasonable steps” through a documented governance trail.
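A minimal sketch of how that mapping could be checked in practice: given a register of models and the SMF recorded against each, flag any model with no recognised owner. The register layout and the function name are hypothetical, and the SMF table is limited to the two functions named above.

```python
# Assumption: only the two SMFs mentioned in the text are treated as valid owners.
SMF_ROLES = {"SMF4": "Chief Risk", "SMF24": "Chief Operations"}


def models_without_owner(model_owners):
    """Return the models whose recorded owner is missing or not a known SMF."""
    return sorted(m for m, smf in model_owners.items() if smf not in SMF_ROLES)
```

For example, `models_without_owner({"credit-scoring": "SMF4", "chatbot": None})` flags only `chatbot`, which is the kind of gap a governance trail is meant to surface before the regulator does.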

Q2. How does the 2026 "Duty of Care" apply to "Black Box" models?

The FCA Consumer Duty now explicitly states that “technological complexity” is not an excuse for poor outcomes. If a model’s decision-making process cannot be explained in plain English to the regulator, it is deemed non-compliant. We use Explainable AI (XAI) techniques to translate complex neural weights into auditable “Reason Codes” that prove your firm is acting in good faith.
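To show the general idea behind "Reason Codes", the sketch below uses the simplest possible case: a linear scoring model, where each feature's contribution is its weight multiplied by how far the applicant sits from a baseline. This is a stand-in for illustration, not the XAI method used in the framework; neural networks need attribution techniques that go beyond this. All feature names and numbers are invented.

```python
def reason_codes(weights, applicant, baseline, top_n=2):
    """Return the features that pushed this applicant's score down the most."""
    contributions = {
        feature: weights[feature] * (applicant[feature] - baseline[feature])
        for feature in weights
    }
    # Keep only adverse (negative) contributions, most harmful first.
    adverse = sorted((c, f) for f, c in contributions.items() if c < 0)
    return [feature for _, feature in adverse[:top_n]]
```

Each returned feature name can then be mapped to a plain-English sentence for the customer or the regulator.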

Q3. Are we liable for the biases within third-party LLMs like OpenAI?

Yes. In 2026, the UK Treasury’s Critical Third Party (CTP) Regime makes it clear: firms are responsible for the entire supply chain. You cannot “outsource” your liability. Our CTP Shield provides independent vetting of external models, ensuring your Data Processing Agreements (DPAs) include the mandatory “Right to Audit” clauses required for high-stakes financial decisions.

Q4. How often must "Fairness-as-a-Service" audits be conducted?

To mitigate “Model Drift,” the 2026 standard has moved from annual reviews to continuous monitoring. Our framework implements automated “bias triggers.” If an algorithm’s approval rates for a protected characteristic (under the Equality Act 2010) deviate by more than 2%, the system generates an immediate alert for human intervention.
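A bias trigger of this kind could be sketched as follows. The text does not say what the 2% deviation is measured against, so this example assumes it means two percentage points away from the overall approval rate; the function name and the input format are hypothetical.

```python
from collections import defaultdict


def bias_alerts(decisions, threshold=0.02):
    """decisions: iterable of (group, approved) pairs, with approved a bool.

    Returns {group: deviation} for every group whose approval rate differs
    from the overall rate by more than the threshold.
    """
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)
    if not totals:
        return {}
    overall = sum(approvals.values()) / sum(totals.values())
    alerts = {}
    for group in totals:
        deviation = approvals[group] / totals[group] - overall
        if abs(deviation) > threshold:
            alerts[group] = round(deviation, 4)
    return alerts
```

A non-empty result is the signal to route the model to a human reviewer; small groups produce noisy rates, so a production check would also need a minimum sample size.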

Q5. What is the "Kill Switch" protocol for agentic AI?

As AI agents move toward more autonomous execution, the FCA expects a “Human-in-the-Loop” safety valve. Our governance framework includes defined “Operational Thresholds.” If an AI agent attempts a transaction or decision that exceeds its pre-set risk appetite, the system defaults to a “Hard Stop”—requiring manual verification from a certified Senior Manager before proceeding.
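The "Hard Stop" behaviour described above can be sketched as a guard around every agent action. The names (`AgentAction`, `HardStop`, `execute`) and the single monetary limit are assumptions made for illustration; a real operational threshold would likely cover more than transaction size.

```python
from dataclasses import dataclass
from typing import Optional


class HardStop(Exception):
    """Raised when an agent action needs manual sign-off before it can run."""


@dataclass
class AgentAction:
    description: str
    amount_gbp: float


def execute(action: AgentAction, limit_gbp: float,
            approved_by: Optional[str] = None) -> str:
    # Hypothetical rule: anything above the pre-set limit is blocked
    # unless a named approver has signed it off.
    if action.amount_gbp > limit_gbp and approved_by is None:
        raise HardStop(
            f"'{action.description}' exceeds {limit_gbp:.2f} GBP; "
            "manual verification required"
        )
    return f"executed: {action.description}"
```

Raising an exception rather than returning a flag means the agent cannot continue by simply ignoring the result; the calling code has to handle the stop explicitly.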

Secure Your “Reasonable Steps” Defence

Transition from AI potential to proven regulatory resilience. Let’s benchmark your governance framework against the 2026 mandate.

Branch Offices

Ireland: 6 Fern Road, Sandyford, Dublin, D18 FP98, Ireland

Switzerland: Chamerstrasse 172, 6300 Zug (own offices)

Thailand: Village Chai Charoen Ville Project 7 88/103 Village No. 8, Nakhon Sawan Tok, Subdistrict Mueang Nakhon Sawan Province 60000, Thailand

Headquarters

Grosvenor House, 11 St Pauls Square, Birmingham B3 1RB, UK

+44 (0) 1215820192
