Introduction

The European Union’s Artificial Intelligence Act (EU AI Act) is a landmark regulation that sets rules for AI systems to promote safety, transparency, and accountability. For businesses navigating this terrain, understanding the different roles (or "entities") the Act defines and how it categorises AI systems is crucial. This article breaks down these categories to help businesses determine where they fall under the EU AI Act. It also explores the risk matrix the Act applies to different AI systems.

AI Entities under the EU AI Act

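1. Providers: These entities develop AI systems (or have them developed) and place them on the market or put them into service under their own name or trademark, whether for payment or free of charge. Providers are responsible for ensuring their AI systems comply with the EU AI Act’s requirements.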

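2. Deployers (Formerly Users): Deployers are organisations or individuals that use AI systems under their authority in a professional context; purely personal, non-professional use is excluded. Deployers of high-risk systems must use them in accordance with the provider’s instructions for use and comply with the Act’s other deployer obligations, such as assigning human oversight.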

3. Authorised Representatives: These are entities located or established in the EU that have received a written mandate from a provider based outside the EU to act on its behalf. They help ensure compliance with the EU AI Act and serve as a point of contact for regulatory authorities.

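4. Product Manufacturers: If a high-risk AI system is a safety component of a product covered by EU product safety legislation and is placed on the market together with that product under the manufacturer’s name or trademark, the product manufacturer is treated as the provider of the AI system and takes on the provider’s obligations under the EU AI Act.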

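5. Distributors: Distributors are entities in the supply chain, other than the provider or importer, that make AI systems available on the EU market. Before doing so, they must verify that high-risk AI systems bear the required CE marking, are accompanied by the EU declaration of conformity and instructions for use, and that the provider and importer have met their relevant obligations under the Act.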

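6. Importers: Importers are entities located or established in the EU that place on the EU market AI systems bearing the name or trademark of a person or company established outside the EU. Before placing a high-risk system on the market, they must ensure it complies with the EU AI Act, for example by checking that the provider has carried out the conformity assessment and drawn up the technical documentation.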

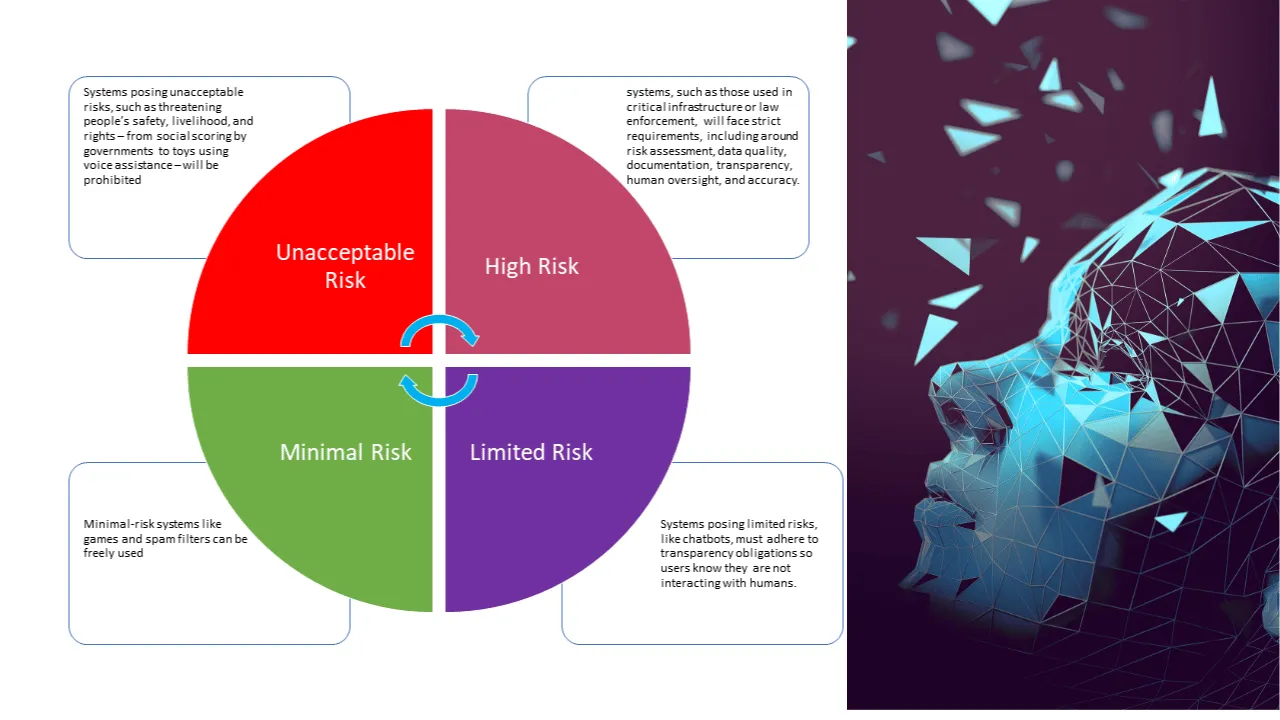

Categories of AI Systems and the Risk Matrix

The EU AI Act sorts AI systems into four tiers based on the level of risk they pose:

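- Unacceptable Risk: These AI systems are considered a clear threat to people’s safety, livelihoods, and rights, for example social scoring or manipulative techniques that cause significant harm. They are prohibited: they may not be placed on the market, put into service, or used in the EU.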

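- High Risk: This category includes AI systems that are safety components of products already covered by EU product safety legislation, as well as AI systems used in specific areas: biometrics, critical infrastructure, education and vocational training, employment, access to essential private and public services, law enforcement, migration, asylum and border control management, and the administration of justice and democratic processes. These systems are subject to stringent requirements, including risk management, data governance, technical documentation, record-keeping, transparency, human oversight, and a conformity assessment before they reach the market.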

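- Limited Risk: AI systems that interact directly with people (e.g., chatbots) or generate synthetic content such as deepfakes fall under this category. While they pose a lower risk, they carry transparency obligations: for example, people must be told they are interacting with an AI system, and deepfakes must be disclosed as artificially generated.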

- Minimal Risk: Most AI applications, such as spam filters or AI-enabled video games, fall into this category. The EU AI Act imposes no specific requirements on these systems, but providers and deployers are encouraged to adopt voluntary codes of conduct.

Conclusion: The Benefit of Formiti’s AI Assessment Service

The Formiti AI Assessment Service is a valuable resource for businesses navigating this complex landscape. Our service offers a comprehensive evaluation of your AI systems in the context of the EU AI Act. We identify which type of AI entity your business represents and where each of your AI systems sits within the risk matrix. In doing so, we help ensure that your AI applications are compliant and optimised for ethical and responsible use. Our expertise in global data protection laws positions us uniquely to guide you through this evolving regulatory environment.